- I'm creating a web scraper with Go and wanted to ask some questions about how most of them work. For example, how does Googlebot avoid using a lot of bandwidth when scraping? It has to visit each URL to get the data, and there can be thousands of URLs, so that takes not only bandwidth but also a lot of time.

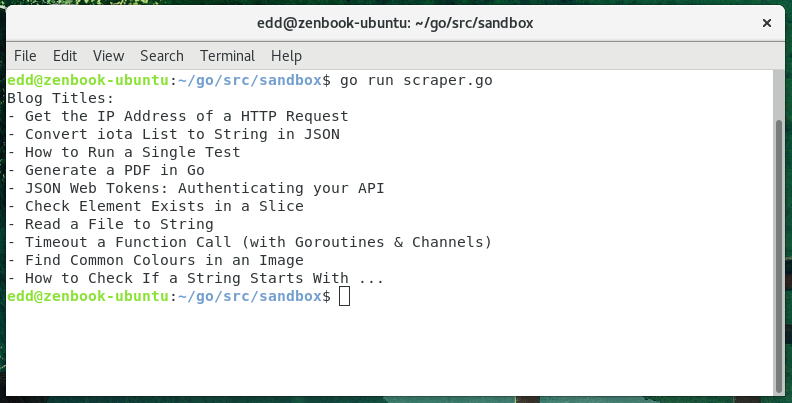

- I have previously written a post on scraping Google with Python. As I am starting to write more Go, I thought I should write the same tutorial using Go to scrape Google. Why not scrape Google search results using Google’s home-grown programming language?

- Web scraping is the process of extracting content from a website. Since any website is ultimately HTML code, a web scraper parses this underlying HTML and extracts values from it. Let’s set things up to begin with. I create a folder named webscraper under the folder in which I.

Concurrent programming is a very complicated field, and Go makes it pretty easy. Go is a modern language which was created with concurrency in mind. On the other hand, Python is an older language and writing a concurrent web scraper in Python can be tricky, even if Python has improved a.

I have been flirting with Go for a few weeks now, and I built a simple forum-like website using gin, a popular web framework for Go. After building the application, I was satisfied with how much I had learned about the language, so I decided to do another little project with it. While browsing the web mindlessly (like most of us do), I stumbled upon a comment about web scraping in Python, and an idea popped into my mind: why not scrape the front page of a popular online forum, then use the data to populate my own database? Now, scraping a website is not illegal, but you should know what you can and cannot scrape from a website. Many websites have a robots.txt file which gives such information. While there are tons of web scraping tutorials on the web, mostly in Python, I felt there weren’t enough of them in Go, so I decided to write one. I did my research and found an elegant Go framework for scraping websites called colly, and with this tool I was able to scrape the front page of a popular Nigerian forum called Nairaland.

The Go language has a ton of hype around it: it’s relatively new, the syntax is relatively easy to pick up compared to other statically typed languages, it is very fast, and it natively supports concurrency, which makes it a language of choice for many in building cloud services and network applications. We can leverage this speed to scrape websites in a fast and easy way.

Web Scraping

Web scraping is a form of data extraction that pulls data from websites. A web scraper is usually a bot that uses the HTTP protocol to access websites, extract HTML elements from them and use the data for various purposes. I’ll be sharing with you how you can scrape a website with minimal effort in Go. Let’s go 🚀

First, you will need to have Go installed on your system and know the basics of the language before you can proceed. We’ll start by creating a folder to house our project: open your terminal and create a folder.

Then initialize a Go module using the Go toolchain (the go mod init command).

Replace the username and project name with appropriate values. By now we should have a go.mod file in our folder; a go.sum file is added next to it once we pull in a dependency, and together they track our dependencies. Next, we go get colly with the following command.

Then we can get our hands dirty. Create a new main.go file and fire up your favorite text editor or IDE.
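A minimal sketch of such a struct, assuming the four fields the scraper fills in later (title, URL, body and author). The names `Post`, its fields, the JSON tags and the `summary` helper are illustrative and not necessarily what the original code used.

```go
package main

import "fmt"

// Post holds the data scraped for a single front-page post.
// The field names and JSON tags are illustrative assumptions.
type Post struct {
	Title  string `json:"title"`
	URL    string `json:"url"`
	Body   string `json:"body"`
	Author string `json:"author"`
}

// summary returns a one-line description of the post, handy for logging.
func (p Post) summary() string {
	return fmt.Sprintf("%q by %s (%s)", p.Title, p.Author, p.URL)
}

func main() {
	p := Post{
		Title:  "Example headline",
		URL:    "https://example.com/post/1",
		Body:   "Body text of the post.",
		Author: "someuser",
	}
	fmt.Println(p.summary())
}
```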

The above is the data structure in which we will store a single post; it contains the necessary information about that post. This was all I needed to populate my database. I was not interested in getting the comments, since we all know how toxic the comments section of forums can be :).

We need to call the NewCollector function to create our web scraper; then, using CSS selectors, we can identify specific elements to extract data from. The main idea is that we target specific nodes, extract data, build our data structure and dump it in a JSON file. After inspecting the Nairaland HTML structure (which I think is quite messy), I was able to target the specific nodes I wanted.

The OnHTML method registers a callback function to be called every time the scraper comes across an HTML node matching the selector we passed in. The above code visits every link to a front-page news item.

What is happening here is that when we visit each link to a front-page news item, we extract the title, URL, body and author name, using CSS selectors to identify where they are located. We then build up our post struct with this data and append it to our slice. The OnRequest and OnResponse methods each register a callback, called when our scraper makes a request and when it receives a response, respectively. With this data at our disposal, we can then serialize it into JSON to be dumped on disk. There are other storage backends you can use if you want to do something more advanced; check out the docs. We then make a call to c.Visit to visit our target website.
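The serialization step needs only the standard library. The sketch below assumes a `Post` struct with JSON tags and an output file called posts.json; both names, and the `readPosts` and `roundTrip` helpers used for a quick check, are illustrative.

```go
package main

import (
	"encoding/json"
	"fmt"
	"os"
	"path/filepath"
)

// Post is an illustrative stand-in for the scraped post struct.
type Post struct {
	Title  string `json:"title"`
	URL    string `json:"url"`
	Body   string `json:"body"`
	Author string `json:"author"`
}

// writePosts serializes posts as indented JSON and writes the result to path.
func writePosts(path string, posts []Post) error {
	data, err := json.MarshalIndent(posts, "", "  ")
	if err != nil {
		return err
	}
	return os.WriteFile(path, data, 0o644)
}

// readPosts loads posts back from a JSON file.
func readPosts(path string) ([]Post, error) {
	data, err := os.ReadFile(path)
	if err != nil {
		return nil, err
	}
	var posts []Post
	err = json.Unmarshal(data, &posts)
	return posts, err
}

// roundTrip writes posts to a temporary file and reads them back,
// which is a quick way to confirm the file contains what we expect.
func roundTrip(posts []Post) ([]Post, error) {
	dir, err := os.MkdirTemp("", "scraper")
	if err != nil {
		return nil, err
	}
	defer os.RemoveAll(dir)
	path := filepath.Join(dir, "posts.json")
	if err := writePosts(path, posts); err != nil {
		return nil, err
	}
	return readPosts(path)
}

func main() {
	posts := []Post{{
		Title:  "Example headline",
		URL:    "https://example.com/post/1",
		Body:   "Body text of the post.",
		Author: "someuser",
	}}
	if err := writePosts("posts.json", posts); err != nil {
		fmt.Fprintln(os.Stderr, "write failed:", err)
		os.Exit(1)
	}
	fmt.Println("wrote posts.json")
}
```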

We use the standard library’s json package to serialize the posts to JSON and then write them to a file on disk, and voilà, we have written our first scraping tool in Go. Easy, right? Armed with this tool, you can conquer all the web, but remember to check the robots.txt file, which tells you which parts of a site you may scrape. It is worth reading more about the robots.txt file, and remember to visit the docs to learn more; there are a ton of great examples you can follow along with there. Cheers ✌️

Thank you for reading

Today, we’re looking at how you can build your first web application in Go, so open up your IDEs and let’s get started.

GoLang Web App Basic Setup

We’ll have to import net/http, and set up our main function with placeholders.

http.HandleFunc registers a handler function for a path in a URL, that is, the part after the host. For example, in http://www.golangdocs.com/2020/08/23 the path is /2020/08/23.

- Here, the index page is linked to the homepage of our site.

- ListenAndServe listens on the port given in the quotes, which is 8000. Once we run our web app, you can find it at localhost:8000.

Next, we need to configure the index page, so let’s create that:

If you’ve ever worked with Django, this will feel familiar: our function for the index page takes a request to a URL as input and responds with something. Replace the inside of the index_page function with anything of your choice (the w is what we write the response to).
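A minimal sketch of the setup described so far, using only the standard library. The handler is named `indexPage` here to follow Go naming conventions, the response text is a placeholder, and `tryIndex` is a hypothetical helper that calls the handler in memory so it can be checked without opening a port.

```go
package main

import (
	"fmt"
	"log"
	"net/http"
	"net/http/httptest"
)

// indexPage answers requests for the home page. r is the incoming request
// and w is where the response is written.
func indexPage(w http.ResponseWriter, r *http.Request) {
	fmt.Fprint(w, "Hello from Go!") // placeholder text (an assumption)
}

// tryIndex calls the handler with an in-memory request and returns the
// status code and body, without starting a server.
func tryIndex() (int, string) {
	rec := httptest.NewRecorder()
	indexPage(rec, httptest.NewRequest(http.MethodGet, "/", nil))
	return rec.Code, rec.Body.String()
}

func main() {
	// Link the home page path to its handler.
	http.HandleFunc("/", indexPage)
	// Listen on port 8000; the app is then reachable at localhost:8000.
	log.Fatal(http.ListenAndServe(":8000", nil))
}
```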

Save this file as “webApp.go”, then run it from the terminal with go run webApp.go.

The following page comes up at localhost:8000 –

ResponseWriter Can Output HTML Too

With Go’s http.ResponseWriter, it is possible to format the text directly using HTML tags.
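As a rough illustration, the handler below writes a short HTML fragment instead of plain text. The markup is a made-up example, the explicit Content-Type header is a precaution rather than something the text requires, and `tryIndex` is a hypothetical helper for checking the handler in memory.

```go
package main

import (
	"fmt"
	"log"
	"net/http"
	"net/http/httptest"
)

// indexPage writes HTML to the response so the browser renders the tags.
func indexPage(w http.ResponseWriter, r *http.Request) {
	w.Header().Set("Content-Type", "text/html; charset=utf-8")
	fmt.Fprint(w, "<h1>Welcome</h1><p>This text is <em>formatted</em> with HTML tags.</p>")
}

// tryIndex calls the handler in memory and returns the content type and body.
func tryIndex() (contentType, body string) {
	rec := httptest.NewRecorder()
	indexPage(rec, httptest.NewRequest(http.MethodGet, "/", nil))
	return rec.Header().Get("Content-Type"), rec.Body.String()
}

func main() {
	http.HandleFunc("/", indexPage)
	log.Fatal(http.ListenAndServe(":8000", nil))
}
```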

and that gives us the desired output:

This is still just one page, but say you wanted your site to respond without an error when someone visits localhost:8000/something_else.

Let’s code for that!
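One possible way to do this, offered as a sketch rather than the original listing: in net/http, a handler registered on the pattern "/" receives every path that has no more specific handler, so it can inspect the path and answer accordingly. The handler name `pageHandler`, the response text and the `tryPath` helper are assumptions.

```go
package main

import (
	"fmt"
	"html"
	"log"
	"net/http"
	"net/http/httptest"
	"strings"
)

// pageHandler answers every path. "/" gets the home page; any other path
// gets a page that echoes the path (HTML-escaped) instead of an error.
func pageHandler(w http.ResponseWriter, r *http.Request) {
	w.Header().Set("Content-Type", "text/html; charset=utf-8")
	if r.URL.Path == "/" {
		fmt.Fprint(w, "<h1>Home</h1>")
		return
	}
	name := html.EscapeString(strings.TrimPrefix(r.URL.Path, "/"))
	fmt.Fprintf(w, "<h1>You asked for %s</h1>", name)
}

// tryPath calls the handler in memory for the given path and returns
// the status code and body.
func tryPath(path string) (int, string) {
	rec := httptest.NewRecorder()
	pageHandler(rec, httptest.NewRequest(http.MethodGet, path, nil))
	return rec.Code, rec.Body.String()
}

func main() {
	http.HandleFunc("/", pageHandler)
	log.Fatal(http.ListenAndServe(":8000", nil))
}
```

Escaping the path before writing it back matters because the path is user input and ends up inside HTML.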

Output:

Voilà!

Gorilla Mux for ease of web app development

Let me introduce you to a package named Gorilla Mux, which will make your web development much easier. So first, let’s install it using go get in the terminal.

We’ll make a few changes to the code above and use a Gorilla Mux router instance instead of our indexHandler:

GoLang web application HTML templates

The hard coded design is quite plain and unimpressive. There is a method to rectify that – HTML templates. So let’s create another folder called “templates”, which will contain all the page designs.

We’ll also add a new file here called “index.html”, and add something simple:

Let’s switch back to our main .go file, and import the “html/template” package. Since our templates must be accessible from all handlers, let’s declare them as a global object:

Now we need to tell Go to parse index.html for the template design and load the result into our templates object:

Then modify the indexPage handler to contain:

And now if we run it, we’ll have exactly what we wanted.
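The template steps above can be sketched in one file. To stay runnable without files on disk, this sketch parses the template from a string constant standing in for templates/index.html; the `Title` field, the page markup and the `renderIndex` helper are assumptions.

```go
package main

import (
	"bytes"
	"html/template"
	"log"
	"net/http"
)

// indexHTML stands in for templates/index.html so the sketch needs no files.
const indexHTML = `<!DOCTYPE html>
<html><body><h1>{{.Title}}</h1></body></html>`

// templates is the package-level template set shared by all handlers.
// With real files it could instead be loaded with
// template.Must(template.ParseGlob("templates/*.html")).
var templates = template.Must(template.New("index.html").Parse(indexHTML))

// renderIndex executes the index template with the given title.
func renderIndex(title string) (string, error) {
	var buf bytes.Buffer
	err := templates.ExecuteTemplate(&buf, "index.html", struct{ Title string }{title})
	return buf.String(), err
}

// indexPage renders the template and writes the result to the response.
func indexPage(w http.ResponseWriter, r *http.Request) {
	page, err := renderIndex("Hello from a template")
	if err != nil {
		http.Error(w, "template error", http.StatusInternalServerError)
		return
	}
	w.Header().Set("Content-Type", "text/html; charset=utf-8")
	w.Write([]byte(page))
}

func main() {
	http.HandleFunc("/", indexPage)
	log.Fatal(http.ListenAndServe(":8000", nil))
}
```

Rendering into a buffer first means a template error can still be reported with a clean 500 response, since nothing has been written to the client yet.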

Using Redis with Go web app

As a brief introduction to Redis, which we’ll be using as our database, they describe themselves best:

Redis is an open source (BSD licensed), in-memory data structure store, used as a database, cache and message broker. It supports data structures such as strings, hashes, lists, sets, sorted sets with range queries, bitmaps, hyperloglogs, geospatial indexes with radius queries and streams.

https://redis.io/

So first, download and install Redis: https://redis.io/download

Import the go-redis package and declare a global object:

Instantiate the Redis client in the main function:

Then we need to grab some data from the Redis server:

And then render it into the index.html file:

We’re done configuring our HTML, which will take the elements from the comments list stored in Redis and place them in our web app.
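The rendering side can be sketched with html/template alone. In this sketch a plain string slice stands in for the comments fetched from Redis, and the markup and the `renderComments` helper are assumptions; no Redis client is involved.

```go
package main

import (
	"bytes"
	"fmt"
	"html/template"
	"log"
)

// indexHTML stands in for templates/index.html: it loops over the comments
// passed to the template and renders each one as a list item.
const indexHTML = `<ul>{{range .}}<li>{{.}}</li>{{end}}</ul>`

var templates = template.Must(template.New("index.html").Parse(indexHTML))

// renderComments executes the template with a slice of comments.
// In the real app the slice would come from the Redis query.
func renderComments(comments []string) (string, error) {
	var buf bytes.Buffer
	err := templates.ExecuteTemplate(&buf, "index.html", comments)
	return buf.String(), err
}

func main() {
	out, err := renderComments([]string{"First comment", "Second comment"})
	if err != nil {
		log.Fatal(err)
	}
	fmt.Println(out)
}
```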

So now we can open our command line and type in redis-cli to enter the Redis shell, where we can push comments into the empty list:

Then if we run our app, you can see that it is now fetching the comments from the server. The same code would work against a remote Redis instance, say one hosted on AWS, once the client is pointed at its address.

Ending Notes

Making a web application can take anywhere from a few days to a few months depending on the complexity of the application. For every button or functionality, there is help in the official Golang documentation, so definitely check that out.
